As the gaming industry evolves toward more immersive and intelligent experiences, integrating local Large Language Models (LLMs) into next-generation gaming consoles could become one of the most significant technological shifts since the arrival of 3D graphics. The convergence of artificial intelligence and gaming hardware promises to change how players interact with game worlds, deepening immersion through intelligent NPCs, dynamic storytelling, and adaptive gameplay.

The Current State of Gaming Console AI Technology

Existing Console Capabilities and Limitations

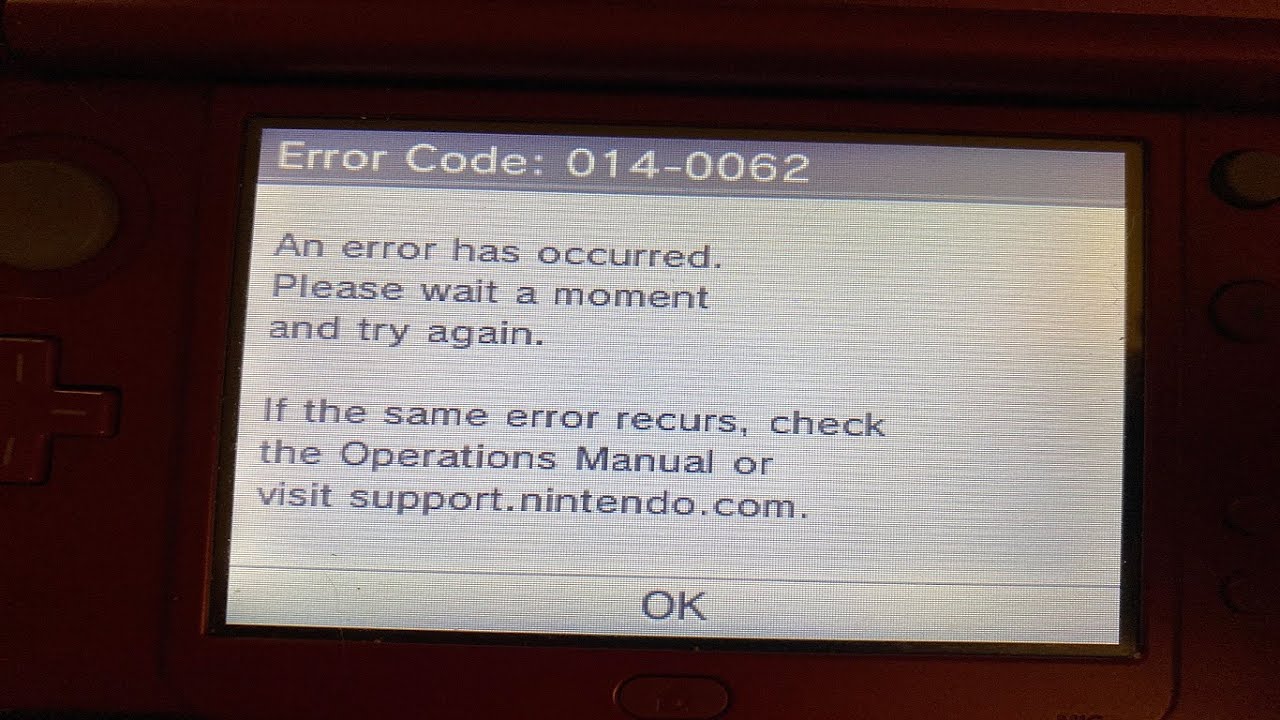

Current ninth-generation consoles, the PlayStation 5 and Xbox Series X/S, offer substantial improvements in processing power and graphics capability over their predecessors. The PlayStation 5 uses a custom 8-core AMD Zen 2 CPU running at up to 3.5GHz with 16GB of GDDR6 RAM, while the Xbox Series X has similar specifications with its CPU clocked at 3.8GHz. However, neither system includes a dedicated neural processing unit (NPU) designed specifically for AI inference.

The Nintendo Switch 2, released in June 2025, is not primarily designed to run LLMs, but it marks a notable step toward AI-integrated consoles: its custom NVIDIA processor includes dedicated RT Cores and Tensor Cores. The system supports up to 4K gaming in TV mode and uses Deep Learning Super Sampling (DLSS) for AI upscaling, demonstrating how AI can already enhance console gaming.

Market Growth and Industry Momentum

The AI in gaming market is growing rapidly: one projection estimates an increase of USD 27.47 billion between 2025 and 2029, at a compound annual growth rate of 42.3%. This growth reflects rising industry investment in AI technologies, from procedural content generation to intelligent character behavior. Publishers such as NetEase and studios such as Niantic are already integrating generative AI-powered NPCs into their games, and Xbox has announced a partnership with Inworld AI to develop next-generation character technologies.

The Case for Local LLM Integration in Gaming Consoles

Advantages of Edge Computing for Gaming Applications

Local LLM processing offers significant advantages over cloud-based AI solutions for gaming applications, particularly in latency-sensitive scenarios. Edge computing enables real-time processing of high volumes of data with minimal latency, which is crucial for maintaining immersive gaming experiences in AR and VR environments. By processing AI inference locally rather than relying on remote servers, gaming consoles can deliver consistent performance regardless of internet connectivity quality or server load.
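The latency argument can be made concrete with a back-of-envelope model: the wait before an NPC starts answering is the network round trip (cloud only) plus prompt processing, and the full reply adds the remaining tokens divided by the generation rate. This is a minimal sketch; the `InferencePath` type and every number in it are hypothetical placeholders, not measurements of any console or service.

```python
from dataclasses import dataclass


@dataclass
class InferencePath:
    """Hypothetical latency profile for one way of producing an NPC reply."""
    name: str
    network_rtt_ms: float     # round trip to a remote server; 0 for on-device inference
    first_token_ms: float     # prompt processing before the first token appears
    tokens_per_second: float  # steady-state generation rate


def first_token_latency_ms(path: InferencePath) -> float:
    """How long the player waits before the NPC starts responding."""
    return path.network_rtt_ms + path.first_token_ms


def reply_latency_ms(path: InferencePath, reply_tokens: int) -> float:
    """Time until a reply of `reply_tokens` tokens has been fully generated."""
    remaining_ms = (reply_tokens - 1) / path.tokens_per_second * 1000.0
    return first_token_latency_ms(path) + remaining_ms


# Placeholder numbers, not measurements of any real console or service.
local = InferencePath("on-device", network_rtt_ms=0.0, first_token_ms=150.0, tokens_per_second=30.0)
for rtt in (30.0, 120.0, 400.0):  # good, average and congested connections (assumed)
    cloud = InferencePath("cloud", network_rtt_ms=rtt, first_token_ms=80.0, tokens_per_second=60.0)
    print(f"RTT {rtt:>5.0f} ms: cloud first token {first_token_latency_ms(cloud):.0f} ms, "
          f"local first token {first_token_latency_ms(local):.0f} ms")
```

In this toy model the on-device figure stays fixed while the cloud figure moves with connection quality; which path is faster on average depends entirely on the numbers plugged in, but only the local one is independent of the network.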

The privacy and data security benefits of local processing are equally compelling: player data and gaming preferences stay on the device instead of being transmitted to external cloud servers. Local inference also lets players use AI features as often as they like without consuming cloud-based credits or paying subscription fees, making AI-enhanced gaming more accessible to a broader audience.

Technical Requirements for Local LLM Implementation

Implementing local LLMs in gaming consoles would require substantial hardware upgrades over current-generation systems. The key components would likely include dedicated NPUs delivering 50-100 TOPS (trillions of operations per second) of AI compute, significantly more system memory (24-32GB of GDDR7), and specialized AI memory pools for storing model parameters.

LLM inference requires a careful balance between model size and performance. For gaming applications, models with 7-8 billion parameters are a practical trade-off between capability and real-time performance: once quantized, they can fit within the memory and processing limits of consumer gaming hardware while still delivering meaningful gameplay improvements.
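The memory side of that trade-off is simple arithmetic: weights take parameters × bits ÷ 8 bytes, and the key/value (KV) cache grows with context length. The sketch below assumes a Llama-3-8B-class layout (32 layers, 8 key/value heads of dimension 128) purely for illustration; real runtimes add activation buffers and overhead, and practical 4-bit formats store per-group scales, so actual files are somewhat larger than the 4-bit figure.

```python
def weight_memory_gib(num_params: float, bits_per_weight: float) -> float:
    """Memory needed just to hold the model weights, in GiB."""
    return num_params * bits_per_weight / 8 / 2**30


def kv_cache_memory_gib(layers: int, kv_heads: int, head_dim: int,
                        context_tokens: int, bytes_per_value: int = 2) -> float:
    """KV cache size: one key and one value vector per layer, per KV head, per token."""
    per_token_bytes = 2 * layers * kv_heads * head_dim * bytes_per_value
    return per_token_bytes * context_tokens / 2**30


# Assumed configuration resembling an 8B-parameter Llama-3-class model.
PARAMS = 8e9
for bits in (16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gib(PARAMS, bits):5.2f} GiB")
print(f"KV cache, 4096 tokens, fp16: {kv_cache_memory_gib(32, 8, 128, 4096):.2f} GiB")
```

At 16-bit precision the weights alone approach 15 GiB, which is why the 7-8B class is usually paired with 8- or 4-bit quantization on consumer hardware.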

Transforming NPCs: From Scripted Characters to Living Personalities

Dynamic Behavior and Contextual Awareness

Local LLMs enable NPCs to exhibit more intelligent behavior that adapts to player actions and environmental context in real time. Unlike traditional scripted NPCs that follow predetermined dialogue trees and behavior patterns, AI-powered characters can hold natural conversations, remember previous interactions, and develop distinct personality traits based on their experiences in the game world.
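One common way to give an LLM-driven NPC context is to rebuild its prompt every turn from a fixed persona, the current game state, and a short rolling window of recent dialogue. This is a minimal sketch under that assumption; `NpcState`, `build_prompt`, and the example character are hypothetical, and the resulting string would be passed to whatever on-device inference runtime the console provides.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class NpcState:
    """Hypothetical per-NPC state fed to a local LLM on every turn."""
    name: str
    persona: str  # fixed character description written by designers
    recent_turns: deque = field(default_factory=lambda: deque(maxlen=6))

    def remember(self, speaker: str, line: str) -> None:
        # Oldest turns fall off automatically once maxlen is reached.
        self.recent_turns.append(f"{speaker}: {line}")


def build_prompt(npc: NpcState, world_context: list[str], player_line: str) -> str:
    """Assemble persona, current game state and recent dialogue into one prompt string."""
    sections = [
        f"You are {npc.name}. {npc.persona}",
        "Current situation:\n" + "\n".join(f"- {fact}" for fact in world_context),
        "Recent conversation:\n" + "\n".join(npc.recent_turns),
        f"Player: {player_line}\n{npc.name}:",
    ]
    return "\n\n".join(sections)


smith = NpcState("Brenna", "A gruff blacksmith who distrusts the city guard.")
smith.remember("Player", "I need my sword repaired.")
smith.remember("Brenna", "Leave it on the anvil. Come back at dusk.")
prompt = build_prompt(smith, ["It is dusk.", "The player carries a guard's badge."],
                      "Is my sword ready?")
print(prompt)
```

Because the game state is injected each turn, the same model can react to the badge the player is carrying without any dialogue tree having anticipated it.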

Inworld AI’s Character Engine demonstrates how multi-modal AI systems can orchestrate different aspects of NPC behavior, including speech recognition, emotional responses, and contextual decision-making. Such systems let NPCs perceive their environment through vision and audio processing, so they can react appropriately to visual cues and spatial relationships within the game world.

Procedural Personality Development

Advanced AI NPCs can develop distinct personalities through machine learning algorithms that analyze player interactions and environmental factors. This kind of procedural personality generation can produce characters that feel individual rather than like variations on pre-designed archetypes. NPCs can form lasting relationships with players, show emotional growth, and pursue their own objectives within the game world, creating a living ecosystem of interactive characters.
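As a much simpler stand-in for a learned model, personality drift can be sketched as an exponential moving average over trait scores: each game event nudges the relevant traits toward a target, so repeated behavior shifts a character steadily while one event cannot flip it outright. The trait names, `EVENT_EFFECTS` table, and learning rate are hypothetical; the resulting scores could be rendered into the NPC’s persona text before prompting.

```python
# Hypothetical mapping from game events to the trait values they pull toward.
EVENT_EFFECTS = {
    "player_gave_gift": {"trust": +1.0, "warmth": +0.5},
    "player_lied": {"trust": -1.0},
    "player_helped_fight": {"trust": +0.5, "courage": +0.5},
}


def update_traits(traits: dict[str, float], event: str, rate: float = 0.2) -> dict[str, float]:
    """Move each affected trait a fraction `rate` (0 < rate <= 1) of the way toward its target.

    This is an exponential moving average; with targets clamped to [-1, 1],
    traits that start inside [-1, 1] stay there.
    """
    updated = dict(traits)
    for trait, target in EVENT_EFFECTS.get(event, {}).items():
        current = updated.get(trait, 0.0)
        target = max(-1.0, min(1.0, target))
        updated[trait] = current + rate * (target - current)
    return updated


traits = {"trust": 0.0, "warmth": 0.0, "courage": 0.0}
for _ in range(3):
    traits = update_traits(traits, "player_gave_gift")
print(traits)
```

Unknown events leave the traits unchanged, so designers can add new event types without breaking existing characters.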

The implementation of long-term memory systems allows NPCs to remember significant events, player decisions, and relationship developments across multiple gaming sessions. This persistent character development creates meaningful consequences for player actions and enables complex narrative arcs that evolve organically based on player choices.
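A cross-session memory system needs two pieces: persistence (write memories to the save data) and retrieval (pick the few most relevant ones to put back into the prompt). The sketch below assumes a JSON file per NPC and scores memories by keyword overlap, an importance value set by game logic, and a recency decay; `NpcMemoryStore` and its scoring weights are hypothetical, and production systems often use embedding similarity instead of keywords.

```python
import json
import re
import tempfile
from dataclasses import asdict, dataclass
from pathlib import Path


@dataclass
class Memory:
    text: str          # short summary of what happened
    importance: float  # 0..1, assigned by game logic (hypothetical scale)
    session: int       # play session in which the event occurred


def tokens(text: str) -> set[str]:
    return set(re.findall(r"[a-z']+", text.lower()))


class NpcMemoryStore:
    """Hypothetical long-term memory for one NPC, persisted to a JSON file between sessions."""

    def __init__(self, path: Path):
        self.path = path
        self.memories: list[Memory] = []
        if path.exists():
            self.memories = [Memory(**m) for m in json.loads(path.read_text())]

    def add(self, text: str, importance: float, session: int) -> None:
        self.memories.append(Memory(text, importance, session))

    def save(self) -> None:
        self.path.write_text(json.dumps([asdict(m) for m in self.memories]))

    def recall(self, keywords: set[str], current_session: int, k: int = 3) -> list[str]:
        """Return the k memories scoring highest on keyword overlap, importance and recency."""
        def score(m: Memory) -> float:
            overlap = len(keywords & tokens(m.text))
            recency = 0.9 ** (current_session - m.session)  # older sessions weigh less
            return overlap + m.importance * recency
        return [m.text for m in sorted(self.memories, key=score, reverse=True)[:k]]


store_path = Path(tempfile.mkdtemp()) / "brenna_memories.json"
store = NpcMemoryStore(store_path)
store.add("The player returned my stolen hammer", importance=0.9, session=1)
store.add("The player haggled over the price of nails", importance=0.2, session=2)
store.save()

reloaded = NpcMemoryStore(store_path)  # e.g. when the next play session starts
print(reloaded.recall({"hammer"}, current_session=3, k=1))
```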

Hardware Architecture for LLM-Enhanced Gaming Consoles

Neural Processing Unit Integration

Integrating dedicated NPUs is the most critical hardware change needed for local LLM support in gaming consoles. Current NPU designs, such as the one in AMD’s Ryzen AI Z2 Extreme processor, offer up to 50 TOPS of AI processing power while remaining energy-efficient enough for sustained gaming sessions. These specialized units run AI inference separately from the main CPU and GPU, reducing compute contention during intensive gameplay, although on a shared-memory system they still compete with the CPU and GPU for memory bandwidth.

Separating AI processing from traditional gaming computation allows complex LLM inference to run alongside high-fidelity graphics rendering. This parallelism helps keep AI-enhanced features from compromising frame rates or visual quality in demanding scenes, provided the game schedules inference so that rendering never waits on it.
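On the software side, the same separation means the game loop must never block on a model call. A minimal sketch of that pattern: submit inference to a background worker, keep ticking frames with a placeholder line, and swap in the reply once it is ready. Here a Python thread and `fake_generate` (a hypothetical stand-in that sleeps for 0.2 s) play the role that an NPU job queue and a real model would on a console.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_generate(prompt: str) -> str:
    """Stand-in for a local LLM call; sleeps to simulate about 0.2 s of inference."""
    time.sleep(0.2)
    return f"(reply to: {prompt})"


def run_frames(executor: ThreadPoolExecutor, prompt: str, frame_time: float = 1 / 60):
    """Simulate a game loop that keeps ticking while a reply is generated in the background."""
    pending = executor.submit(fake_generate, prompt)  # inference starts off the main thread
    frames = 0
    subtitle = "..."  # placeholder shown until the reply is ready
    while not pending.done():
        # Real per-frame work (world update, rendering `subtitle`) would go here.
        time.sleep(frame_time)
        frames += 1
    subtitle = pending.result()
    return frames, subtitle


with ThreadPoolExecutor(max_workers=1) as pool:
    frames, line = run_frames(pool, "Where is the blacksmith?")
print(f"{frames} frames ticked while waiting; NPC says {line!r}")
```

Polling `done()` once per frame keeps the frame loop in control; the reply simply appears a few frames later instead of stalling the game.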

Memory and Storage Considerations

LLM-enhanced gaming consoles would require substantial improvements in both system memory and storage to hold model weights and inference caches. Next-generation systems will likely feature 24-32GB of high-bandwidth GDDR7 memory, possibly alongside dedicated AI memory pools built on High Bandwidth Memory (HBM). Such configurations would provide fast access to model parameters while leaving sufficient capacity for traditional gaming workloads.
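Why bandwidth matters as much as raw TOPS can be shown with two rough ceilings for single-stream text generation. Each generated token reads roughly every weight once, so the rate cannot exceed available bandwidth divided by model size; compute allows about two operations (a multiply and an add) per weight per token. In the sketch, 448 GB/s is the PlayStation 5’s published GDDR6 bandwidth, while the 4 GB model (about 8B parameters at 4 bits), the 25% bandwidth share left over after rendering, and the 50 TOPS NPU are assumptions.

```python
def bandwidth_bound_tokens_per_s(bandwidth_gb_s: float, model_gb: float,
                                 share_for_ai: float = 1.0) -> float:
    """Ceiling on single-stream decoding speed when memory bandwidth is the bottleneck.

    Generating one token reads (roughly) every weight once, so the rate cannot exceed
    the bandwidth available to inference divided by the size of the weights.
    """
    return bandwidth_gb_s * share_for_ai / model_gb


def compute_bound_tokens_per_s(tops: float, num_params: float) -> float:
    """Ceiling when compute is the bottleneck: about 2 operations per weight per token."""
    return tops * 1e12 / (2 * num_params)


print(bandwidth_bound_tokens_per_s(448, 4.0))        # 112.0
print(bandwidth_bound_tokens_per_s(448, 4.0, 0.25))  # 28.0
print(compute_bound_tokens_per_s(50, 8e9))           # 3125.0
```

Under these assumptions the bandwidth ceiling sits far below the compute ceiling, so faster memory, not just a higher TOPS rating, determines how quickly an on-device NPC can talk.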

Storage requirements also grow with local LLM support, likely calling for 2-4TB NVMe SSDs with read speeds above 7GB/s. Fast storage enables quick model loading and swapping, allowing games to use multiple specialized AI models for different gameplay scenarios without significant loading delays.
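The storage-speed requirement follows from load time = model size ÷ effective read rate. A minimal sketch, assuming a 4 GB quantized model and an `efficiency` factor (an assumption) that discounts peak sequential speed for file-system, decompression, and copy overheads; the SATA-class figure is a rough comparison point.

```python
def load_time_s(model_gb: float, read_gb_s: float, efficiency: float = 1.0) -> float:
    """Seconds to stream model weights from storage.

    `efficiency` discounts the drive's peak sequential rate for real-world overheads
    (file system, decompression, copying into memory); its value here is an assumption.
    """
    return model_gb / (read_gb_s * efficiency)


for label, rate in [("7 GB/s NVMe", 7.0), ("~0.5 GB/s SATA-class SSD", 0.5)]:
    print(f"{label}: {load_time_s(4.0, rate, efficiency=0.7):.1f} s for a 4 GB model")
```

Sub-second swaps are plausible on fast NVMe storage, while slower drives would turn every model switch into a visible loading pause.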

Applications Beyond NPC Interactions

Dynamic Content Generation and Adaptive Gameplay

Local LLMs enable sophisticated procedural content generation that extends well beyond character dialogue and behavior. Games can dynamically generate quest narratives, environmental descriptions, and even gameplay mechanics based on player preferences and past behavior. This can produce a wide variety of content, as long as the output stays consistent with the game’s established lore and design principles.
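Keeping generated content consistent with the lore usually means not trusting raw model output. One approach, sketched here under stated assumptions, is to request structured JSON, validate it against designer-maintained constraints, retry a few times, and fall back to hand-authored content. The lore sets, schema, fallback quest, and the stub `generate` callable are all hypothetical.

```python
import json
from typing import Callable, Optional

# Hypothetical lore constraints a designer might maintain alongside game data.
KNOWN_LOCATIONS = {"Ashford", "Grey Marsh", "Old Mill"}
KNOWN_FACTIONS = {"River Guild", "Ember Order"}
REQUIRED_KEYS = {"title", "giver_faction", "location", "objective"}

# Hand-authored quest used whenever generation fails validation.
FALLBACK_QUEST = {"title": "Clear the Old Mill", "giver_faction": "River Guild",
                  "location": "Old Mill", "objective": "Drive out the rats."}


def validate_quest(raw: str) -> Optional[dict]:
    """Parse model output as JSON; reject anything that breaks the schema or the lore."""
    try:
        quest = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(quest, dict) or set(quest) != REQUIRED_KEYS:
        return None
    if not all(isinstance(value, str) for value in quest.values()):
        return None
    if quest["location"] not in KNOWN_LOCATIONS or quest["giver_faction"] not in KNOWN_FACTIONS:
        return None
    return quest


def generate_quest(generate: Callable[[str], str], prompt: str, attempts: int = 3) -> dict:
    """Ask the model (any callable prompt -> str) for a quest; fall back to authored content."""
    for _ in range(attempts):
        quest = validate_quest(generate(prompt))
        if quest is not None:
            return quest
    return dict(FALLBACK_QUEST)


# Stub model: the first answer invents a location, the second stays within the lore.
replies = iter([
    '{"title": "Lost Lantern", "giver_faction": "River Guild", "location": "Moon Castle", "objective": "Find it."}',
    '{"title": "Lost Lantern", "giver_faction": "River Guild", "location": "Grey Marsh", "objective": "Find it."}',
])
quest = generate_quest(lambda _prompt: next(replies), "Write a short side quest as JSON.")
print(quest["location"])  # Grey Marsh
```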

Adaptive difficulty systems powered by local AI can analyze player performance in real-time and adjust challenge levels, enemy behavior patterns, and resource availability to maintain optimal engagement. These systems go beyond simple numerical adjustments, potentially modifying level layouts, puzzle complexity, and narrative pacing to match individual player capabilities and preferences.
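The numerical core of adaptive difficulty can be sketched as a feedback controller: track the player’s recent success rate and nudge a difficulty multiplier toward a target rate. The target, gain, window, and bounds below are hypothetical tuning values; richer adjustments such as level layouts or pacing could be driven by the same signal, for example by including it in an LLM prompt.

```python
class DifficultyController:
    """Nudge a difficulty multiplier so the recent success rate approaches a target.

    A simple proportional controller over a sliding window of encounter outcomes;
    all default parameters are hypothetical tuning values.
    """

    def __init__(self, target_success: float = 0.7, gain: float = 0.5,
                 window: int = 10, lo: float = 0.5, hi: float = 2.0):
        self.target = target_success
        self.gain = gain
        self.window = window
        self.lo, self.hi = lo, hi
        self.outcomes: list[bool] = []
        self.difficulty = 1.0

    def record(self, player_won: bool) -> float:
        self.outcomes = (self.outcomes + [player_won])[-self.window:]
        success_rate = sum(self.outcomes) / len(self.outcomes)
        # Winning more often than the target raises difficulty; losing more lowers it.
        self.difficulty += self.gain * (success_rate - self.target)
        self.difficulty = max(self.lo, min(self.hi, self.difficulty))
        return self.difficulty


controller = DifficultyController()
for won in [True, True, True, True, True]:
    level = controller.record(won)
print(round(level, 2))  # 1.75
```

Clamping to a fixed range keeps a streak of wins or losses from pushing the game into unplayable extremes.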

Voice Command Integration and Natural Language Processing

Advanced voice recognition and natural language processing enable more intuitive player-game interactions through conversational interfaces. Players can issue complex commands, ask questions about game mechanics or lore, and talk with NPCs without predetermined response options. In many situations this can replace traditional menu navigation, creating more immersive and accessible gaming experiences.
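A common safeguard for voice commands is to have the language model translate the speech transcript into a structured intent and then execute it only if it names an action on a whitelist. This is a minimal sketch under that assumption; the `ACTIONS` table and the JSON shape are hypothetical, and the model call itself is omitted, so the examples pass model output in directly.

```python
import json
from typing import Callable

# Hypothetical whitelist of game actions that voice commands may trigger.
ACTIONS: dict[str, Callable[..., str]] = {
    "equip": lambda item: f"Equipped {item}.",
    "travel": lambda destination: f"Travelling to {destination}.",
    "ask_lore": lambda topic: f"Looking up lore about {topic}.",
}


def dispatch(model_output: str) -> str:
    """Execute a structured intent produced by the language model, if it is allowed."""
    try:
        intent = json.loads(model_output)
        handler = ACTIONS[intent["action"]]  # unknown actions raise KeyError
        return handler(**intent["args"])     # wrong or missing arguments raise TypeError
    except (json.JSONDecodeError, KeyError, TypeError):
        return "Sorry, I didn't understand that."


# e.g. transcript "put on the iron helmet" -> model output below
print(dispatch('{"action": "equip", "args": {"item": "iron helmet"}}'))
print(dispatch('{"action": "delete_save", "args": {}}'))
```

Whatever the model says, only actions the designers registered can run, which keeps free-form speech from triggering unintended game operations.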

The integration of text-to-speech synthesis with emotion modeling allows AI characters to deliver dialogue with appropriate vocal inflections and emotional nuances. This capability enhances the believability of character interactions while supporting accessibility features for players with visual impairments.
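One way an emotion label (from the LLM or from game state) can reach a speech synthesizer is through SSML, whose `<prosody>` element adjusts rate, pitch, and volume. The emotion-to-prosody table below is hypothetical, and TTS engines differ in how much SSML they honor, so treat this as a sketch of the idea rather than a recipe for a particular engine.

```python
from xml.sax.saxutils import escape

# Hypothetical emotion-to-prosody table using SSML 1.1 <prosody> attribute syntax.
PROSODY = {
    "angry": {"rate": "115%", "pitch": "+10%", "volume": "+6dB"},
    "sad": {"rate": "85%", "pitch": "-10%", "volume": "-3dB"},
    "neutral": {},  # no change; SSML requires at least one attribute on <prosody>
}

SPEAK_OPEN = ('<speak version="1.1" xmlns="http://www.w3.org/2001/10/synthesis" '
              'xml:lang="en-US">')


def to_ssml(line: str, emotion: str) -> str:
    """Wrap an NPC line in SSML so a TTS engine can vary delivery with the emotion label."""
    attrs = PROSODY.get(emotion, PROSODY["neutral"])
    body = escape(line)  # escape &, < and > so dialogue text cannot break the markup
    if attrs:
        attr_text = " ".join(f'{name}="{value}"' for name, value in attrs.items())
        body = f"<prosody {attr_text}>{body}</prosody>"
    return f"{SPEAK_OPEN}{body}</speak>"


print(to_ssml("You dare return here?", "angry"))
```

Unknown emotion labels fall back to neutral delivery, so an unexpected label from the model degrades gracefully instead of producing invalid markup.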

Market Implications and Industry Transformation

Competitive Advantages and Platform Differentiation

Console manufacturers that invest in local LLM capabilities could gain significant competitive advantages in an increasingly saturated market. Sony’s PlayStation 5 Pro already uses AI-driven PlayStation Spectral Super Resolution for enhanced visual fidelity, Microsoft is reportedly developing built-in AI assistants for next-generation Xbox systems, and Nintendo’s Switch 2 shows that AI features such as DLSS are viable on a portable platform.

Offering AI-enhanced gaming without recurring cloud service fees is a compelling value proposition for consumers. Local processing avoids ongoing subscription costs and keeps performance consistent regardless of internet connectivity, making AI-powered gaming accessible in more markets worldwide.

Developer Ecosystem and Content Creation

Local LLM capabilities democratize AI-enhanced content creation by providing game developers with powerful tools that don’t require extensive machine learning expertise. Standardized AI APIs and development frameworks enable smaller studios to incorporate sophisticated AI features that were previously exclusive to major publishers with substantial technical resources.
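What a standardized AI API buys smaller studios is that game code depends on one small interface rather than on a particular model or chip. The sketch below is a hypothetical illustration of such an interface, not any console vendor’s actual SDK: `GenerationRequest`, `OnDeviceLLM`, and the `EchoBackend` test double are invented names.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class GenerationRequest:
    """Hypothetical platform-neutral request a game would send to an on-device model."""
    prompt: str
    max_tokens: int = 128
    temperature: float = 0.7
    stop: tuple[str, ...] = ()


class OnDeviceLLM(ABC):
    """Hypothetical interface a console SDK might expose; not any vendor's real API."""

    @abstractmethod
    def generate(self, request: GenerationRequest) -> str:
        ...


class EchoBackend(OnDeviceLLM):
    """Trivial backend for testing game code without a model or NPU."""

    def generate(self, request: GenerationRequest) -> str:
        text = f"[echo] {request.prompt}"
        for stop in request.stop:
            text = text.split(stop)[0]               # cut at the first stop sequence
        words = text.split()[: request.max_tokens]   # crude stand-in for a token limit
        return " ".join(words)


def npc_reply(llm: OnDeviceLLM, player_line: str) -> str:
    # Game code depends only on the interface, so backends can be swapped per platform.
    return llm.generate(GenerationRequest(prompt=player_line, max_tokens=20, stop=("\n",)))


print(npc_reply(EchoBackend(), "Hello there"))
```

A test double like `EchoBackend` also lets teams without machine learning expertise build and test dialogue flows before a real model is integrated.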

The emergence of AI-powered development tools accelerates content creation workflows while reducing production costs. Developers can leverage AI for asset generation, dialogue writing, and gameplay balancing, freeing creative teams to focus on innovative design concepts and player experience optimization.

Technical Challenges and Implementation Solutions

Power Management and Thermal Considerations

The integration of high-performance NPUs and increased memory capacity raises significant power consumption and thermal management challenges for gaming console design. Next-generation systems must balance AI processing capabilities with acceptable power draw and heat generation, particularly in portable gaming scenarios. Advanced power management algorithms and dynamic frequency scaling help optimize energy consumption based on real-time processing demands.
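Dynamic frequency scaling can be illustrated with a toy governor: choose the slowest operating point that still meets current inference demand, skip points that exceed the power budget, and drop to the lowest point when the chip is too hot. The operating points, budget, and throttle temperature are hypothetical; real governors live in firmware and rely on hardware counters, and demand might be estimated from how many NPC inference requests are queued.

```python
# Hypothetical NPU operating points: (frequency in MHz, power in watts), slowest first.
OPERATING_POINTS = [(400, 2.0), (800, 4.5), (1200, 8.0), (1600, 13.0)]


def choose_npu_frequency(demand_mhz: float, temp_c: float, power_budget_w: float,
                         throttle_temp_c: float = 85.0) -> int:
    """Pick the slowest operating point that meets demand within the power budget.

    At or above the throttle temperature, fall back to the lowest point.
    If nothing affordable meets demand, run the fastest point that fits the budget.
    """
    if temp_c >= throttle_temp_c:
        return OPERATING_POINTS[0][0]
    affordable = [(freq, power) for freq, power in OPERATING_POINTS if power <= power_budget_w]
    if not affordable:
        return OPERATING_POINTS[0][0]
    for freq, _power in affordable:  # slowest first, so the first match saves the most power
        if freq >= demand_mhz:
            return freq
    return affordable[-1][0]


print(choose_npu_frequency(demand_mhz=700, temp_c=60.0, power_budget_w=10.0))
```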

Innovative cooling solutions, including liquid cooling systems and advanced thermal interface materials, become essential for maintaining stable performance during intensive AI processing workloads. Console manufacturers must also consider the acoustic impact of enhanced cooling systems to maintain acceptable noise levels for home entertainment environments.

Model Optimization and Compression Techniques

Deploying capable LLMs within console hardware constraints requires sophisticated model optimization and compression. Quantization reduces weight precision from 16- or 32-bit floating point to 8-bit or even 4-bit integers while keeping inference quality acceptable for gaming applications. Knowledge distillation trains smaller, specialized models to reproduce much of a larger model’s behavior while fitting within memory and processing limits.
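Both techniques reduce to short formulas. The sketch shows symmetric quantization with a single shared scale (real 4-bit schemes typically use one scale per small group of weights) and the commonly used temperature-softened distillation objective, KL divergence between teacher and student distributions scaled by T². Pure Python is used for clarity; production code would operate on tensors.

```python
import math


def quantize_symmetric(weights: list[float], bits: int = 8) -> tuple[list[int], float]:
    """Map floats to signed integers in [-qmax, qmax] using one shared scale."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8-bit, 7 for 4-bit
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    return [v * scale for v in q]


def softmax(logits: list[float], temperature: float) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp((x - m) / temperature) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


weights = [0.5, -1.27, 0.003, 1.0]
q, scale = quantize_symmetric(weights, bits=8)
print(q, scale, dequantize(q, scale))
print(distillation_loss([2.0, 1.0, 0.1], [1.5, 1.2, 0.3]))
```

Each dequantized weight lands within half a quantization step (`scale / 2`) of the original, which is the error budget that quantization trades for a 2-4× smaller model.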

Dynamic model loading and swapping strategies allow games to utilize multiple specialized AI models for different scenarios without keeping all models in memory simultaneously. This approach maximizes the effective AI capabilities while working within hardware constraints of consumer gaming systems.
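Model swapping under a fixed memory budget is essentially a cache-eviction problem. This sketch assumes least-recently-used (LRU) eviction, one reasonable policy among several; `ModelCache`, the `loader` callable, and the model names and sizes are hypothetical.

```python
from collections import OrderedDict
from typing import Callable


class ModelCache:
    """Keep specialized models resident within a memory budget, evicting the least recently used.

    `loader` is a hypothetical function that reads a model from storage and returns it.
    Sizes are in GB and are supplied by the caller.
    """

    def __init__(self, budget_gb: float, loader: Callable[[str], object]):
        self.budget_gb = budget_gb
        self.loader = loader
        self.resident: OrderedDict = OrderedDict()  # name -> (model, size_gb)
        self.evicted: list[str] = []

    def used_gb(self) -> float:
        return sum(size for _model, size in self.resident.values())

    def get(self, name: str, size_gb: float) -> object:
        if name in self.resident:
            self.resident.move_to_end(name)  # mark as most recently used
            return self.resident[name][0]
        if size_gb > self.budget_gb:
            raise MemoryError(f"{name} ({size_gb} GB) exceeds the whole budget")
        while self.used_gb() + size_gb > self.budget_gb:
            old_name, _ = self.resident.popitem(last=False)  # least recently used goes first
            self.evicted.append(old_name)  # a real engine would free the weights here
        model = self.loader(name)
        self.resident[name] = (model, size_gb)
        return model


loads: list[str] = []


def fake_loader(name: str) -> str:
    loads.append(name)
    return f"<{name} weights>"


cache = ModelCache(budget_gb=8.0, loader=fake_loader)
cache.get("dialogue", 4.0)
cache.get("quest", 3.0)
cache.get("dialogue", 4.0)  # already resident: no reload
cache.get("combat", 3.0)    # over budget: evicts "quest", the least recently used
print(loads, cache.evicted, list(cache.resident))
```

The model used most recently stays resident, so frequently needed models such as dialogue avoid reloads while rarely used ones are swapped out on demand.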

Future Implications and Industry Evolution

Cross-Platform Integration and Ecosystem Development

The adoption of local LLM capabilities across gaming platforms will likely drive standardization efforts for AI APIs and development frameworks. Cross-platform compatibility becomes increasingly important as players expect consistent AI-enhanced experiences across different gaming devices and ecosystems. Cloud-hybrid approaches may emerge, combining local AI processing with occasional cloud-based model updates and specialized computations.

The integration of AI capabilities into gaming consoles positions these platforms as central hubs for broader entertainment and productivity applications. Voice assistants, educational software, and creative tools can leverage the same AI processing capabilities, expanding the utility and value proposition of gaming hardware beyond traditional entertainment scenarios.

Long-term Market Transformation

Widespread adoption of local LLM technology in gaming consoles could fundamentally change player expectations and industry standards. Games without AI-enhanced features may come to seem dated or limited, pressuring developers to integrate intelligent systems across genres. Such a shift would parallel earlier transitions from 2D to 3D graphics and from single-player to online multiplayer experiences.

The gaming industry’s investment in AI technology will likely accelerate broader consumer adoption of AI-powered devices and services. As players become accustomed to intelligent, responsive gaming experiences, they will demand similar capabilities in other entertainment and productivity applications.

To Conclude

Integrating local Large Language Models into next-generation gaming consoles could be a transformative milestone for interactive entertainment. By enabling more intelligent NPCs, dynamic content generation, and adaptive gameplay, local AI processing addresses key limitations of current gaming systems while opening new creative possibilities.

The technical requirements for implementing local LLMs in gaming hardware are substantial but achievable with current and emerging technologies. Dedicated neural processing units, expanded memory configurations, and optimized storage systems provide the foundation for AI-enhanced gaming experiences that operate independently of cloud connectivity.

As the AI in gaming market continues its rapid growth, console manufacturers that successfully integrate local LLM capabilities stand to gain significant competitive advantages. Better player experiences, lower ongoing cloud costs, and expanded creative possibilities make local AI processing a strong candidate for an essential feature of future gaming platforms.

The move toward AI-powered gaming consoles reflects broader technological trends toward edge computing and intelligent devices. If it succeeds, this transformation will reshape not only gaming experiences but also the standards for interactive entertainment for decades to come.
